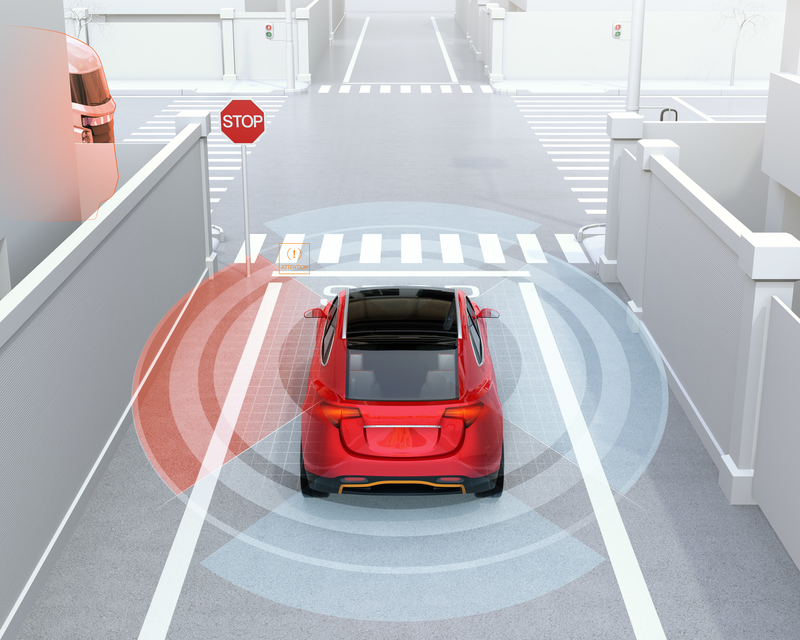

Researchers at Israel’s Ben-Gurion University (BGU) say this is because advanced driver assistance systems (ADAS) in semi- or fully-autonomous vehicles (AVs) treat these depthless projections as real objects.

PhD student Ben Nassi says: “This is not a bug. This is not the result of poor code implementation. This is a fundamental flaw in object detectors that essentially use feature matching for detecting visual objects and were not trained to distinguish between real and fake objects. This type of attack is currently not taken into consideration by the automobile industry.”

The ‘Phantom of the ADAS’ project also showed that attackers can fool a driving assistance system into believing fake road signs are real by disguising phantoms for 125 milliseconds in advertisements presented on digital billboards near roads.

Nassi says a shortage of vehicular communication systems, which connect cars to each other and to the surrounding infrastructure, is creating a “validation gap” that prevents AVs from validating their virtual perception with a third party.

More alarmingly, Nassi warns that remote attacks exploiting the validation gap do not need to be carried out by skilled hackers: the project demonstrated how such an attack can be carried out by projecting a phantom road sign from a drone.

BGU researchers are now developing a convolutional neural network model that analyses a detected object’s context, surface and reflected light, and which they say is capable of detecting phantoms with high accuracy.